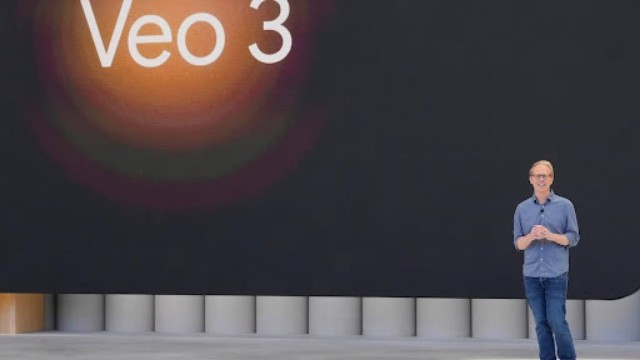

John Vennavally-Rao reports on Google's latest AI tool that can now generate short, lifelike videos with matching sound, lasting up to eight seconds.

Google’s latest AI creation, Veo 3, is taking the internet by storm—and not just for the right reasons. The tool’s video samples, now going viral, have both thrilled and alarmed viewers. One clip in particular shows a standup comedian delivering jokes so convincingly that it’s nearly impossible to tell the performance is entirely AI-made. From perfectly lip-synced punchlines to lively background noise, the video feels real.

Another jaw-dropping example features a group of characters in a musical, singing an ode to garlic bread. These short clips are turning heads not only for their creativity but for their near-perfect realism.

Unlike earlier AI video tools that created grainy or robotic animations, Veo 3 produces crisp visuals and smooth audio. It can handle everything from spoken dialogue to sound effects and background ambience. According to tech expert Carmi Levy, it’s the level of realism that sets it apart. “There are no visual signs that AI was behind these clips. It gave me chills,” he said.

Veo 3 is currently limited to users in the U.S. who subscribe to Google’s $249-a-month premium plan. Still, the reaction from early users suggests the tool marks a significant jump in AI video generation, a technology that has been publicly available only since 2022.

Levy, while impressed, raised concerns about misuse. “This could easily fool anyone into thinking the footage is real. In the wrong hands, it’s dangerous,” he warned.

One compilation shows AI-generated faces repeatedly saying, “We can talk” and “What should we talk about?” This triggered immediate debate about whether AI tools like Veo 3 could be used to mislead or manipulate viewers.

Mark Daley, Chief AI Officer at Western University, sees Veo 3 as more than just a visual upgrade. “It doesn’t just add sound—it follows instructions with remarkable accuracy,” he said.

Google hasn’t revealed what data trained Veo 3, but many suspect it relied on YouTube videos. “AI tools need enormous amounts of data to learn, and YouTube is likely the biggest source,” Daley added.

Aengus Bridgman, who leads the Media Ecosystem Observatory, urged caution. As AI videos become harder to distinguish from real footage, misinformation becomes harder to detect. “The concern isn’t the fun use of AI, it’s how it could be abused to deceive,” he said.

He recommends trusting only verified sources and creators. “Stick with people or channels you know. They’re more likely to do some level of fact-checking,” he advised.

Verifying whether a video is AI-made is difficult even for those who try. Forensic analysis may help, but it requires access to the original file and substantial computing power.

While Google promotes Veo 3 as a breakthrough for content creators, many in the entertainment industry are uneasy. Some fear that AI like this could replace actors, editors, and even directors. Levy thinks a fully AI-made film might arrive sooner than we expect.